Blog automation using ChatGPT and other AI text-generation tools is increasingly popular. Automated content has become a growth segment of the web marketing and online content business.

While these tools are excellent assistants, in my opinion many websites allow them too much autonomy, and it is hurting those sites rather than helping them.

Determining whether a piece of text was generated by GPT-4 or written by a human can be challenging. Both humans and advanced tools can produce high-quality articles.

However, there are some tell-tale signs you can look for. Let’s take a look at some ways to detect AI writing, and at the same time find ways to improve your own AI-assisted articles!

Leaving in the Helpful Responses

ChatGPT and other interactive machine-learning tools provide helpful, chatty responses before providing answers. The laziest users do not even bother removing them.

“Certainly!”

“Great question”

“As an AI language model …”

When these phrases are left in articles (and tweets, college assignments, blog comments, etc.), they are obvious signs of ChatGPT copy and paste. They litter the web more and more, and show how little care some AI tool users take over their impact.

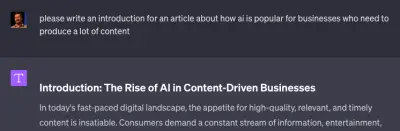

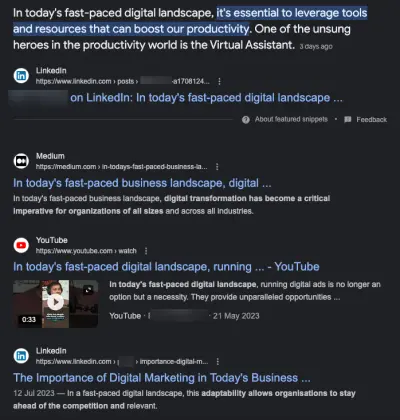
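If you publish a lot of AI-assisted drafts, a quick automated check can catch these leftovers before a reader does. The following is only a minimal sketch: the phrase list is a hypothetical starting point built from the examples above, and the function name `find_chatbot_phrases` is my own invention, not part of any tool.

```python
# Hypothetical starter list, taken from the examples above. Extend it with
# whatever leftovers you notice in your own drafts.
CHATBOT_PHRASES = [
    "certainly!",
    "great question",
    "as an ai language model",
]


def find_chatbot_phrases(text):
    """Return (phrase, line_number) pairs for each leftover phrase found."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        # Lowercase the line so the match ignores capitalization.
        normalized = line.lower()
        for phrase in CHATBOT_PHRASES:
            if phrase in normalized:
                hits.append((phrase, line_number))
    return hits


draft = (
    "Certainly! Here is your article.\n"
    "Dogs are great pets.\n"
    "As an AI language model, I cannot have pets."
)

for phrase, line_number in find_chatbot_phrases(draft):
    print(f"line {line_number}: found '{phrase}'")
```

A plain substring match like this will also flag legitimate uses (an article quoting these phrases, for instance), so treat the hits as prompts for a human editor rather than as a verdict.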

Cliche Introductions

I don’t know how it happened but AI seems to lean heavily on this type of introduction:

How many articles with “In today’s fast-paced digital landscape” and similar approaches must have been in the training data?

This is a strong sign that AI has been used, and it is right there in the first sentence of many articles!

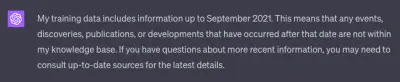

Out of Date Information

Not all popular AI tools have up-to-date information at their digital fingertips because training these systems takes a long time and a lot of data.

For example, GPT-4 is missing any information after September 2021. That is fine if you are asking about Michelangelo the artist, but not so great if you are asking who played Michelangelo in the movie Teenage Mutant Ninja Turtles: Mutant Mayhem.

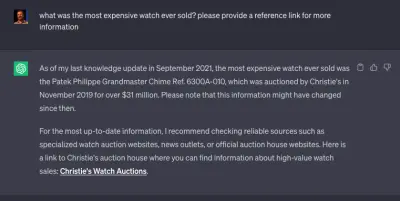

Hallucinations and Fake URLs

Ask ChatGPT to provide references and sometimes it will comply.

But unlike Google Bard and Bing Chat, ChatGPT does not have access to the web … so how does it achieve that?

It makes them up!

OK, that might be a bit mean, but hallucinations are a thing with these advanced language models because part of how they work is kind of like auto-complete.

Rather than always pulling the precise text from a large database, these tools figure out what the next piece of text should be, or fill in the gaps with something that works. You can never be entirely sure if what they are providing is factual or just reads like it might be!

Each version of ChatGPT has improved over the last, but you must still be vigilant.

Over-Optimization

AI tools are not built for subtlety, so if you ask a language model to produce search-engine-optimized content, you might find it keyword stuffing or worse.

This can be even more noticeable when you copy and paste your keyword research into the tool and ask it to generate your article copy.

Remember, as well as algorithmic quality control, search engines such as Google also employ human beings who are out there looking for spam content. Better to use a light touch than over-optimize.

Do not let the content production arms race take your eye off the goal of both ranking and converting to sales with your articles.
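One rough way to spot keyword stuffing in a draft is to measure how much of the text a single keyword phrase takes up. The sketch below is an illustration under my own assumptions: `keyword_density` is a hypothetical helper, and the 3% warning threshold is an arbitrary number chosen for the example, not a figure published by Google or anyone else.

```python
import re


def keyword_density(text, keyword):
    """Return the share of words in `text` taken up by occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    key_words = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key_words:
        return 0.0
    n = len(key_words)
    # Slide a window of n words across the text and count exact phrase matches.
    matches = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == key_words
    )
    return matches * n / len(words)


sample = (
    "Best running shoes for beginners. "
    "Our best running shoes guide lists the best running shoes."
)
density = keyword_density(sample, "best running shoes")

# Arbitrary example threshold (an assumption, not an official limit).
if density > 0.03:
    print(f"Keyword takes up {density:.0%} of the text; consider a lighter touch.")
```

A number like this cannot tell you whether copy reads naturally, so use it to decide which drafts deserve a closer human read.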

Detecting AI-Generated Text

Now that we have dealt with the most obvious and glaring mistakes, let’s get deeper into the weeds:

Repetition Instead of Understanding: Even though your article might appear coherent and contextually correct, AI tools cannot currently provide a nuanced understanding of complex, ethical, or emotional issues.

Ask an AI tool what something was “like”, and it will either rely on a human’s written recollection or will provide only surface-level descriptions:

Compare that to someone who actually saw it in person:

Verbatim Repetition: AI models will repeat whole phrases or sentences verbatim, especially if the input prompts are not well crafted. Human-written, or at least human-edited, articles rarely do this because the phrases will jump out as unnatural and awkward.

Inconsistency: One human author will maintain their writing style from beginning to end of an article, so if the text changes its tone, argument, or standpoint without good reason, then it is likely machine-generated.

Waffling: AI-generated text often fails to take a clear standpoint. The tools are tuned to provide objective-sounding information without bias, whereas humans will leak their conscious or unconscious biases unless they are thoroughly edited.

Filler Words: Sometimes, from time to time, AI might possibly overuse certain phrases or “filler” words to complete or pad out a sentence when the context is not entirely fully clear, if you get what I am saying? Can you see how this might possibly happen especially if you have asked for a certain word count requirement? Edit for brevity!

Unnatural Phrasing: Machine learning tools are good at grammar; many of us rely on software such as Grammarly. Language models, however, do sometimes produce text that seems … odd. At times that is the fault of the prompt, especially when we ask tools to be more creative than they are capable of. Make sure you read any generated text out loud before hitting publish.

Lack of Experience: AI-generated text can’t include genuine personal anecdotes. If a tool does provide one, it is either plagiarism or hallucination, and it won’t read as truthful.

Bloated Text: AI tools can be overly wordy, even for simple concepts. You can ask in your prompt for brevity but it is still worth having a human editor trim and polish before putting your article live.
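Of the signs above, verbatim repetition is the easiest to check mechanically. Here is a minimal sketch that counts sentences appearing more than once in a draft. The sentence splitting is deliberately naive (it breaks on `.`, `!` and `?`), and both the function name `repeated_sentences` and the `min_words` cut-off are my own assumptions for illustration.

```python
import re
from collections import Counter


def repeated_sentences(text, min_words=4):
    """Return {normalized sentence: count} for sentences that occur more than once."""
    # Naive split: break after ., ! or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    normalized = []
    for sentence in sentences:
        # Lowercase and drop punctuation so near-identical copies compare equal.
        key = " ".join(re.findall(r"[a-z0-9']+", sentence.lower()))
        # Skip very short sentences, which repeat naturally ("Yes.", "Why not?").
        if len(key.split()) >= min_words:
            normalized.append(key)
    counts = Counter(normalized)
    return {sentence: count for sentence, count in counts.items() if count > 1}


draft = (
    "Content is king in modern marketing. Short one. "
    "Content is king in modern marketing! Edit your drafts."
)

for sentence, count in repeated_sentences(draft).items():
    print(f"{count}x: {sentence}")
```

The other signs in this list, such as waffling or shifts in tone, are far harder to automate, which is one more reason to keep a human editor in the loop.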

Now that we have beaten up our robot friends, what are some signs that a human was involved in the creation of your content?

Detecting if Articles Are Written by Humans

- Nuance: Humans are better at understanding and conveying complex ideas with empathy.

- Consistency: Humans are more consistent in their arguments and viewpoints and will rarely change their stance during an article, or even between articles, unless for good reason.

- Current: Humans will include the most current and up-to-date information because our databases are not time-locked to 2021.

- Personal: Humans add personal experiences and viewpoints. Google looks for this with its E-E-A-T criteria (Experience, Expertise, Authoritativeness, and Trustworthiness).

- Quirks: Paradoxically, small mistakes or idiosyncratic language can indicate human writing because people are less likely to produce text that is “perfect”. Now, I do NOT suggest introducing errors to make Google think your AI articles are human-written, because Google also has guidelines that your content should be “high quality”. Still, only people can write how they speak.

Conclusion

All this being said, it’s important to remember that these factors are only hints about a text’s origin.

Advanced AI models and content generation tools are constantly improving and evolving, and human writers who are drawing from multiple research sources or who are employing grammar correction can also accidentally mimic the style of machine-generated text.

The best approach is to use the tools as just that, tools! Do not allow any piece of tool-generated content to be let loose without at least a human edit.